I wondered what it would sound like if I treated audio like 2D texture data and blurred it. In one dimension a blur is basically just a low-pass filter, but in two dimensions it turns into some kind of weird flanger / comb filter thing.
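A rough way to see why the second dimension sounds like a comb filter, sketched in Python. This is not the patch's code. It assumes the audio buffer is laid out row-major in a texture `width` samples wide, and it uses a tiny 3-tap `[1, 2, 1] / 4` kernel as a stand-in for a real Gaussian. The function names are made up for illustration. Blurring along a row mixes neighbouring samples, while blurring down a column mixes samples that are `width` apart in time, which is the tap spacing of a comb filter.

```python
def blur_rows(samples):
    """Blur along the buffer: each sample mixes with its immediate neighbours.

    This is an ordinary low-pass filter. Edges are clamped.
    """
    n = len(samples)
    out = []
    for i in range(n):
        left = samples[max(i - 1, 0)]
        right = samples[min(i + 1, n - 1)]
        out.append(0.25 * left + 0.5 * samples[i] + 0.25 * right)
    return out


def blur_columns(samples, width):
    """Blur down the columns of a row-major texture `width` samples wide.

    The vertical neighbours of sample i are samples i - width and i + width,
    so the taps are spaced `width` samples apart in time. Edges are clamped.
    """
    n = len(samples)
    out = []
    for i in range(n):
        up = samples[i - width] if i - width >= 0 else samples[i]
        down = samples[i + width] if i + width < n else samples[i]
        out.append(0.25 * up + 0.5 * samples[i] + 0.25 * down)
    return out


def blur_2d(samples, width):
    """Separable 2D blur: a row pass followed by a column pass."""
    return blur_columns(blur_rows(samples), width)


# Impulse responses show where each blur puts its energy.
impulse = [0.0] * 16
impulse[8] = 1.0
row_response = blur_rows(impulse)
column_response = blur_columns(impulse, 4)
```

With an impulse at index 8 and a width of 4, the row pass spreads energy to indices 7 and 9, while the column pass spreads it to indices 4 and 12. Changing the texture width moves those distant taps, which would be consistent with the flanger-like sweep.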

Here’s a video demo: https://youtu.be/izN-dVmpV9s

And here’s where you can install it: https://www.rebeltech.org/patch-library/patch/_Lich_Gaussian_Blur_2D

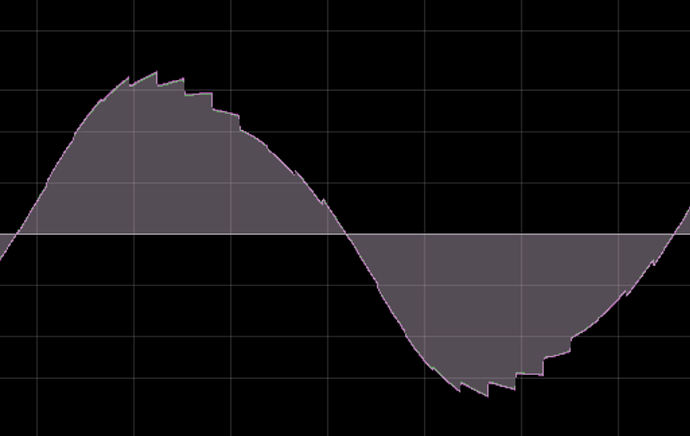

As I was making the demo video I noticed some crunchiness in the sound, so there may still be some work to do here. The crunchiness becomes particularly pronounced when processing sustained chords. To change the texture size without clicks, I’m blurring at two adjacent texture sizes and then blending the results, which meant I had to do the processing at half the sample rate for it to run on the Lich. Maybe this down/upsampling is part of the problem?
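The two-size blend can be sketched like this, again in Python rather than the patch's own code. It assumes a fractional texture size is split into the integer sizes on either side and crossfaded linearly. The helper names, the 3-tap kernel, and the linear crossfade are all assumptions for illustration, and the half-rate processing is left out.

```python
import math


def blur_columns(samples, width):
    """3-tap [1, 2, 1] / 4 blur between samples `width` apart (edges clamped)."""
    n = len(samples)
    out = []
    for i in range(n):
        up = samples[i - width] if i - width >= 0 else samples[i]
        down = samples[i + width] if i + width < n else samples[i]
        out.append(0.25 * up + 0.5 * samples[i] + 0.25 * down)
    return out


def blur_fractional_size(samples, size):
    """Blur at the two integer sizes around `size` and crossfade between them.

    Costs two blur passes per block, but the output changes smoothly as
    `size` moves instead of jumping when it crosses an integer.
    """
    lower = math.floor(size)
    frac = size - lower  # 0.0 means all `lower`, close to 1.0 means nearly all `lower + 1`
    a = blur_columns(samples, lower)
    b = blur_columns(samples, lower + 1)
    return [(1.0 - frac) * x + frac * y for x, y in zip(a, b)]


impulse = [0.0] * 20
impulse[10] = 1.0
blended = blur_fractional_size(impulse, 4.25)
```

At a size of 4.25 the taps from the width-4 blur get 75% of their weight and the taps from the width-5 blur get 25%, so sweeping the size fades one set of taps out while the other fades in.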