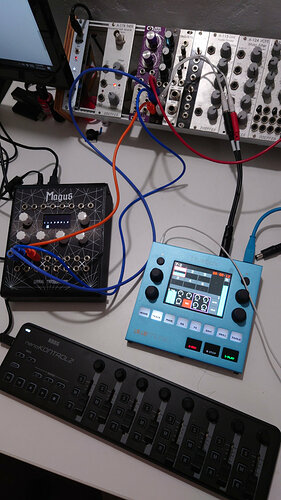

I have a 3-voice paraphonic modular voice called a “Chainsaw”. It has 3 pitch inputs. I would like to use the Magus to set the pitches (either as a MIDI-to-CV bridge, or as a sequencer) so I can play chords. Because the Magus only has the two audio outs, I am trying to use the parameter outs for this. The chords sound really, really bad: the notes do not sound like they are harmonizing. I have now done some more careful testing. (There was a little bit of discussion about this in the Magus build thread.)

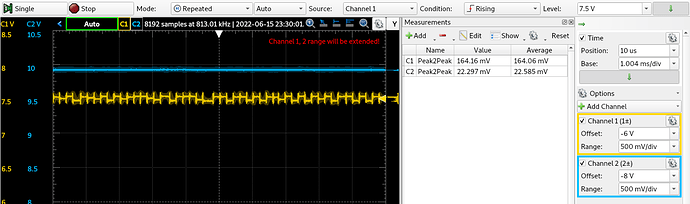

What I conclude is that the parameter outs are just very noisy. I did a test with a Korg microKEY Air connected to the Magus, with the Magus plugged into wall power (and into a Mac via USB simultaneously). I fed the Magus into the Chainsaw v/o 1 input, the Chainsaw Out L output into a Mordax DATA in tuner mode, and the buffered re-output of the Mordax DATA into my audio interface. The Chainsaw is set to a simple square wave. The audio volume on the Magus is 127 and the Magus was running v21. This is the file I recorded:

There are four sections in this file. In each I alternate two nearby notes, something like a D4 and an F4.

In the first section, I disconnected the Magus and drove the pitch CV from a Korg SQ-1.

In the second section I drove the pitch CV from the audio output of the Magus running my Midi2CVTriplet patch. If you play one note at a time, this patch sends incoming notes as CV to both the audio out and a parameter patch point.

In the third section, with the same patch, I drove the pitch CV from the parameter output of the Magus.

In the fourth section I again drove the pitch CV from the parameter output of the Magus but I played one octave higher (the problem is easier to hear there).

From the audio, you can clearly hear that the pure tone sounds pretty bad in sections 3 and 4. Looking at the tuner, what I found was that the Korg SQ-1 held the note but varied by about 0.2 Hz over time. The audio output of the Magus held the note and varied by about 0.1 Hz over time, so it was actually better than the SQ-1. The parameter output varied by about 4 Hz. At low frequencies (around 67 Hz, near C2) that would be a whole semitone.
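To put those numbers in perspective, here is a rough sketch that converts a drift in Hz into cents. It assumes the drift figures apply around D4 (about 293.66 Hz in standard A4 = 440 Hz tuning), which is only my approximation of the notes I was playing; the function names are made up for illustration.

```python
import math

def deviation_cents(freq_hz, drift_hz):
    """Pitch error in cents when a note at freq_hz drifts upward by drift_hz."""
    return 1200.0 * math.log2((freq_hz + drift_hz) / freq_hz)

def semitone_frequency(drift_hz):
    """Frequency at which an upward drift of drift_hz equals one equal-tempered semitone."""
    return drift_hz / (2.0 ** (1.0 / 12.0) - 1.0)

D4 = 293.66  # Hz, assumption: standard A4 = 440 Hz tuning

# Drift values are the rough tuner readings from my test
for label, drift in [("SQ-1", 0.2),
                     ("Magus audio out", 0.1),
                     ("Magus parameter out", 4.0)]:
    print(f"{label}: {deviation_cents(D4, drift):.1f} cents at D4")

print(f"4 Hz is a full semitone at about {semitone_frequency(4.0):.1f} Hz")
```

By this estimate the parameter out is off by a couple of dozen cents at D4, while the SQ-1 and the audio out stay within about a cent or so.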

(In addition, there seems to be a slight glide on the parameter version which is not present on the audio out. There was also a very weird problem I had never seen before today: when I disconnected the patch cable from the audio out and moved it to the parameter out, the USB MIDI connection of the microKEY Air dropped. When this happened I could only get MIDI back by rebooting the Magus. I did not see this when I was running 732ceac17cb-merge-a447765258d9, so maybe this is a regression in v21.)

I am trying to understand if there is anything I can do about this. What exactly is the difference between the parameter and audio outs?

Is the difference a software difference? That is, if I could somehow get the Magus software to configure more ports as audio rather than parameters (have it do 4-channel audio, for example), would I get the more stable voltage out?

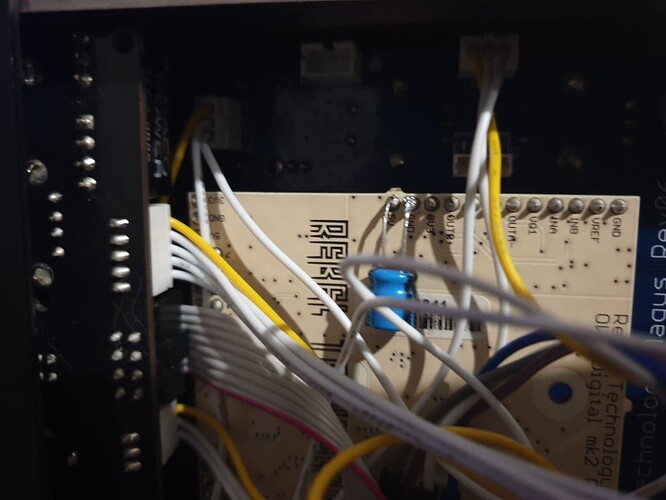

Is it a hardware difference? If the hardware is different, is there some hypothetical way to mod it to get more stable outs? In the worst case, I guess, could I add a low-pass filter with the right resistor and capacitor, either on a breadboard or as a modification to the device? (Funnily, this would leave me in the same place with the Magus as with my Arduino, which has PWM out…)
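For what it's worth, here is a quick sketch of how I would size a simple first-order RC low-pass filter, using the standard cutoff formula f_c = 1 / (2πRC). The resistor and capacitor values are purely hypothetical placeholders, not a recommendation, and I don't know yet whether the noise on the parameter outs sits above a cutoff like this.

```python
import math

def rc_cutoff_hz(r_ohms, c_farads):
    """Cutoff (-3 dB) frequency of a first-order RC low-pass filter."""
    return 1.0 / (2.0 * math.pi * r_ohms * c_farads)

def settle_time_s(r_ohms, c_farads, n_tau=5.0):
    """Approximate time to settle after a step, taken as n_tau time constants."""
    return n_tau * r_ohms * c_farads

# Hypothetical component values, chosen only for illustration
R = 10_000.0  # ohms
C = 1e-6      # farads

print(f"cutoff: {rc_cutoff_hz(R, C):.1f} Hz")
print(f"settling time (5 tau): {settle_time_s(R, C) * 1000:.0f} ms")
```

The trade-off is that a lower cutoff smooths more noise but also slows down note changes, which would add to the glide I am already hearing.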

I am set on doing this with the Magus because it is the only CV sequencing device I have with three outputs. The SQ-1 for example has only two outputs.